From the very beginning, EPAM has had specialists in various countries working on interesting Data projects. Eight or nine years ago, the emergence of Big Data began to change the field of data processing dramatically. Even then, it was clear that the company needed to focus on this competence to take a leading position in the market. Later, EPAM concluded that traditional and new technology skills should be combined on projects, and it merged them into a single Data Practice.

Combining different departments into one Data Practice followed a market trend: the industry had begun to treat data as a whole rather than splitting it up by technology. Today, EPAM's Data Practice employs more than 3,300 people in 102 cities across twenty-six countries, working on a wide variety of projects.

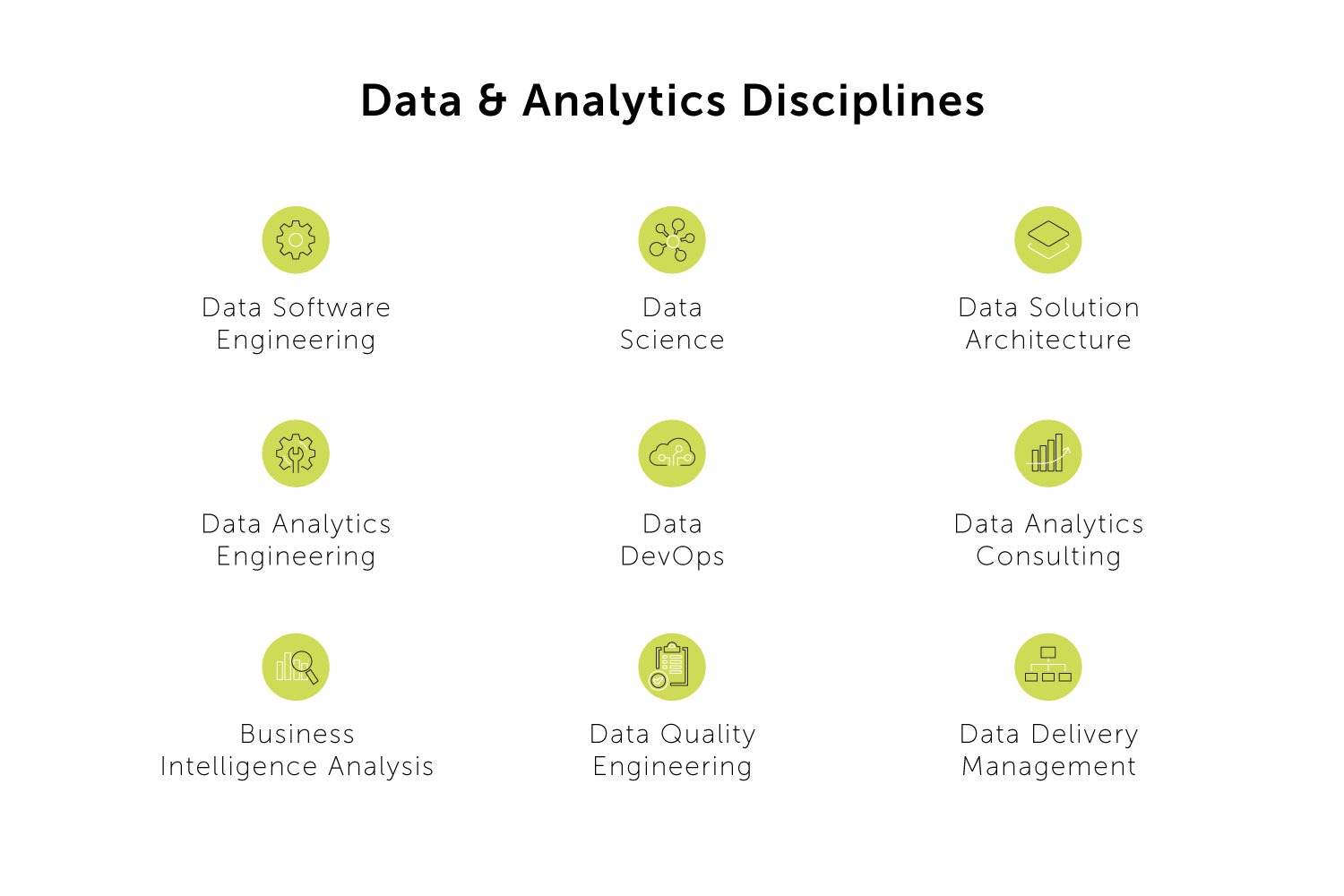

What are the disciplines that make up Data Practice?

The practice's nine main disciplines correspond to the Data services that are in demand on the market today.

How is Data Practice organized at EPAM?

Almost 90% of Junior Specialists in this field join EPAM through training programs, across all locations. As the company takes on more and more projects, we need to meet the growing demand for Data Professionals, and today's Junior Specialists are tomorrow's highly qualified employees. We train students in Data Software Engineering, Data Analytics Engineering, Data Quality Engineering, Data Science, and Data DevOps.

What do specialists in these areas do?

- Data Software Engineers and Data Analytics Engineers collect and process data, run data processes, and build services that turn that data into a Data product.

- A Data Quality Engineer combines engineering tasks with elements of data analysis and testing. At some point, it became clear that traditional testing alone could not provide the data quality control our platforms needed, so Data Quality Engineering was set up as a separate discipline.

- A Data Science Engineer structures and analyzes large amounts of data and uses it to predict events.

- A Data DevOps Engineer works with distributed systems and processes complex data in parallel. He or she must keep track of the many connections between system components and be able to analyze and fix problems.

What is Data Analytics Engineering?

Data Analytics Engineering (DAE) covers the technologies and tools for collecting, processing, and visualizing information, as well as for organizing data warehouses. DAE experts help businesses analyze key metrics and make data-driven decisions.

What do Data Analytics Engineering experts do?

A Data Analytics Engineer turns data into information that end users can understand and work with, for example in Excel. A Data Analytics Engineer works at every stage of the product lifecycle, from data collection and analysis to product development and support.

There are many types of products and product lifecycles in Data Analytics Engineering, so there are several types of Data Analytics Engineers:

- A Data Analytics & Visualization engineer deals with data analysis and visualization.

- Data Integration, DBA & Cloud Migration engineers are responsible for building ETL pipelines, loading data into the Data Warehouse, and working with cloud platforms.

What will the students learn at the training?

First, they will learn what data is, how to work with it, why customers come to us, and which of their problems a Data specialist solves. During the training, students will learn how to model databases; analyze raw data, clean it, and load it into storage; visualize data effectively; and get acquainted with cloud technologies.

- Warehousing, or developing and configuring databases. Students will master the basics of database modeling, learn to write complex SQL queries, and practice building Data Warehouses on a relational DBMS (Microsoft SQL Server).

- Extract, Transform, Load (ETL) processes. Students will learn how to collect and process data using tools such as SSIS or Azure Data Factory.

- Reporting & Visualization. Students will learn how to visualize information and present tabular data as charts using Power BI, Tableau, and other tools.
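The three ETL stages mentioned above can be illustrated with a minimal sketch. It is not part of the training materials. It assumes Python's standard `csv` and `sqlite3` modules, with an in-memory SQLite database standing in for a warehouse such as Microsoft SQL Server. The sample data, the `sales` table, and the `extract`/`transform`/`load` helpers are hypothetical and exist only for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: stray whitespace, inconsistent casing,
# a missing value, and numbers stored as text.
RAW_CSV = """order_id,region,amount
1, North ,120.50
2,south,
3,South,80
4,north,45.25
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read raw rows from a CSV source (hypothetical helper)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: clean and type the data, dropping rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records instead of loading bad data
        region = row["region"].strip().title()  # " North " / "north" -> "North"
        cleaned.append((int(row["order_id"]), region, float(amount)))
    return cleaned


def load(conn: sqlite3.Connection, records: list[tuple[int, str, float]]) -> None:
    """Load: write cleaned records into a (toy) warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales ("
        "order_id INTEGER PRIMARY KEY, region TEXT NOT NULL, amount REAL NOT NULL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(text: str) -> sqlite3.Connection:
    """Run Extract -> Transform -> Load against an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(text)))
    return conn


if __name__ == "__main__":
    conn = run_pipeline(RAW_CSV)
    # A simple report query over the loaded data: total amount per region.
    for region, total in conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ):
        print(region, total)
```

Running the script prints `North 165.75` and `South 80.0`. The order with a missing amount is dropped during the Transform step, so it never reaches the table. Tools such as SSIS or Azure Data Factory orchestrate the same three stages at production scale.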

What are the requirements for candidates?

We are waiting for candidates who:

- Have basic knowledge of relational DBMS theory.

- Have basic knowledge of SQL.

- Know English at B1 (Intermediate) level or higher.

- Can devote 10–15 hours a week to training in the first stage and 20–30 hours in the second stage.

Knowledge of programming languages like Python, JavaScript, and C# will be an advantage.

Tips for those who have decided to apply for the training

- Set aside time for self-study. Check out the useful links in the recommended article on our blog.

- Assess your skills realistically and be honest in the interview. Only the most motivated candidates, those who are genuinely interested in working and studying, will move on.

- Don't study for others; study for yourself. This is not a "pass and forget" kind of thing. All the knowledge you gain in training will be the foundation of your career. All of it is necessary; none of it can be skipped.

Want to start a career in Data Analytics Engineering? Sign up for EPAM's free training programs!